Cite this lesson as: Ashtiani, Z. & Deutsch, C. V. (2024). Kriging with Constraints. In J. L. Deutsch (Ed.), Geostatistics Lessons. Retrieved from http://www.geostatisticslessons.com/lessons/krigingconstraints

Kriging with Constraints

Zahra Ashtiani

University of Alberta

Clayton V. Deutsch

University of Alberta

January 11, 2024

Learning Objectives

- Review Simple Kriging as the best linear unbiased estimator

- Understand that constraints can improve estimation in some circumstances

- Appreciate the variety of constraints that can be considered in Kriging

Introduction

Calculating the best estimate of a regionalized variable at an unsampled location is required in many circumstances. Such spatial interpolation has many applications in resource modelling, environmental site characterization and other situations. Several interpolation approaches have been developed, such as low-order polynomials and spline functions (Wahba, 1978), which predominantly depend on mathematical functions. These variables are mostly treated as random variables, even though they result from underlying natural phenomena and cannot be modeled by a single mathematical equation (Oliver & Webster, 1990). Matheron introduced Kriging, which is based on continuous random variables and the configuration of data available for estimation (Matheron, 1962). Kriging is preferable to other techniques because it is considered the best linear unbiased estimator, as it minimizes the error variance. Moreover, its foundation lies in site-specific relationships between the data and between the data and the unsampled location being estimated (Rossi & Deutsch, 2013). Adding appropriate constraints to Kriging can make it more robust and applicable to different scenarios. This Lesson considers a number of different constrained Kriging approaches and their applicability. The following variants are reviewed: 1) Simple Kriging, 2) Ordinary Kriging, which is the most widely used type of Kriging, 3) Ordinary Cokriging, 4) Penalized Kriging, 5) Universal Kriging, and 6) Compositional Kriging. Appreciating the flexibility of adding constraints to Kriging provides insight into other applications.

Best Local Estimate

An important concept in Kriging is a quantitative measure of “best”. The most common measure is minimizing the mean squared error, which is equivalent in practice to maximizing the \(\mathrm{R}^2\) coefficient of determination. A consequence of minimizing squared error is that the estimates will regress to the mean when there are few local data. There are times when a restricted search is applied to anticipate future information by avoiding smoothing the estimate, but this is done at the expense of larger errors.

A second important concept in Kriging is that of a locally linear estimate. Taken together, multiple Kriged estimates are highly nonlinear, but each local estimate is a linear function of the data. Kriging is strongly data driven; the estimates are linear combinations of the data and the weighting of the data is based on an understanding of spatial variability that also comes from the data.

Kriging is applied within stationary domains. Consider a regionalized variable \(\{ \mathrm{Z}(\mathbf{u}), \mathbf{u} \in A \}\), where \(\mathrm{Z}\) denotes the variable or rock property under consideration, \(\mathbf{u}\) denotes a location vector, and \(\mathrm{A}\) denotes the stationary domain under consideration. Kriging requires a model of the spatial variability of the data. The covariance and variogram are two measures for this purpose(Rossi & Deutsch, 2013). The covariance quantifies the similarity of data, and it is calculated by:

\[\mathrm{C}(\mathbf{h})=\mathrm{E}\{\mathrm{Z}(\mathbf{u}) \cdot \mathrm{Z}(\mathbf{u}+\mathbf{h})\}-\mathrm{m}^2\]

Where \(\mathbf{h}\) represents a lag vector, and \(\mathrm{m}\) is the mean of the data. This function must be defined for any lag vectors between the data and between unsampled locations. The variogram represents the dissimilarity of data:

\[2 \gamma(\mathbf{h})=\mathrm{E}\left\{[\mathrm{Z}(\mathbf{u})-\mathrm{Z}(\mathbf{u}+\mathbf{h})]^2\right\}\]

Common practice in geostatistics is to calculate experimental variogram values for lag vectors in principal directions, then fit them with a valid variogram model. The model reduces noise in the experimental calculations and provide the variogram for all possible lag vectors. An assumption of stationarity allows the covariance to be calculated from the variogram: \(\mathrm{C}(\mathbf{h})=\sigma^2-\gamma(\mathbf{h})\), where \(\sigma^2\) is the stationary variance.

Consider estimation at an unsampled location \(\mathbf{u}\) considering \(\mathrm{n}\) local data denoted as \(\mathrm{z}\left(\mathbf{u}_{\mathrm{i}}\right)\). Simple Kriging is the first approach. In this method, the constant stationary mean is considered in the estimate:

\[\mathrm{z}_{\mathrm{SK}}^{*} (\mathbf{u})-\mathrm{m}=\sum_{\mathrm{i}=1}^{\mathrm{n}} \lambda_{\mathrm{i}}(\mathbf{u}).\left[\mathrm{z}\left(\mathbf{u}_{\mathrm{i}}\right)-\mathrm{m}\right]\]

Where \(\mathrm{z}_{\mathrm{SK}}^{*}(\mathbf{u})\) is the Simple kriging estimator at the unsampled location. The \(\lambda_{\mathrm{i}}(\mathbf{u}) \text{ for }\mathrm{i}=1,\ldots,\mathrm{n}\) values are the weights that each data receive. The error variance is minimized yielding the following system of equations to compute the weights:

\[\sum_{\mathrm{j}=1}^\mathrm{n} \lambda_\mathrm{j}(\mathbf{u}) \cdot \mathrm{C}\left(\mathbf{u}_{\mathrm{j}}, \mathbf{u}_{\mathrm{i}}\right)=\mathrm{C}\left(\mathbf{u}_{\mathrm{i}}, \mathbf{u}\right) \quad \text{for} \quad \mathrm{i}=1, \ldots, \mathrm{n}\]

The \(\mathrm{C}\left(\mathbf{u}_{\mathrm{j}}, \mathbf{u}_{\mathrm{i}}\right)\) values are the covariance values between each pair of data and the \(\mathrm{C}\left(\mathbf{u}_{\mathrm{i}}, \mathbf{u}\right)\) values are the covariance between the unsampled location and the data locations. The known mean receives more weight if the data receive less weight. The minimized estimation variance may also be calculated:

\[\sigma_\mathrm{E}^2(\mathbf{u})=\sum_{\mathrm{i}=1}^\mathrm{n} \sum_{\mathrm{j}=1}^\mathrm{n} \lambda_\mathrm{i}(\mathbf{u}) \lambda_\mathrm{j}(\mathbf{u}) \mathrm{C}\left(\mathbf{u}_\mathrm{i}, \mathbf{u}_\mathrm{j}\right)-2 \cdot \sum_{\mathrm{i}=1}^\mathrm{n} \lambda_\mathrm{i}(\mathbf{u}) \mathrm{C}\left(\mathbf{u}_\mathrm{\mathrm{i}}, \mathbf{u}\right)+\sigma^2\]

The mathematics of kriging is well documented in many references including Cressie (2015), Olea (2012), and McLennan, Leuangthong, & Deutsch (2006). Note that the weights must be recalculated for each unsampled location. This unconstrained optimization, known as Simple Kriging, calculates estimates that are a combination of the data and the global mean.

Lagrange Formalism

Optimization problems usually come with some constraints. In many cases, these constraints can be expressed as linear functions of the parameters. The Lagrange formalism can be invoked to solve constrained optimization. The premise being that the optimum value of the objective function occurs at its extreme points. At these points, the gradient of the objective function is a proportion of the gradient of the constraints (Beavis & Dobbs, 1990). The general form of a constrained optimization problem can be written as:

\[\text{obj } \mathrm{f}(\mathbf{x})\]

\[\text{s.t. } \mathrm{g}_{\mathrm{i}} (\mathbf{x})= 0 \quad \text{for } \mathrm{i} = 1, \ldots , \mathrm{m}\]

Where the objective function is \(\mathrm{f}(\mathbf{x})\), \(\mathrm{g}_{\mathrm{i}} (\mathbf{x}) = 0\) are constraints, and \(\mathrm{m}\) is the number of constraints. The unconstrained form of the problem using Lagrange multipliers is as follows:

\[\mathrm{h}(\mathbf{x})= \mathrm{f}(\mathbf{x})- \sum_{\mathrm{i}=1}^{\mathrm{m}} \mu_{\mathrm{i}} [\mathrm{g}_\mathrm{i}(\mathbf{x})]\]

\(\mu_\mathrm{i}\) are the Lagrange multipliers. Where the partial derivative of \(\mathrm{h}(\mathbf{x})\) is zero, the objective function reaches its extreme point. The partial derivative of \(\mathrm{h}\) is:

\[\mathrm{h}_\mathbf{x}= \mathrm{f}_\mathbf{x} - \sum_{\mathrm{i}=1}^\mathrm{m} \mu_{\mathrm{i}} [\mathrm{g}_{\mathrm{i}_\mathbf{x}}] = 0 .\]

\(\mathrm{f}_\mathbf{x}\) and \(\mathrm{g}_\mathbf{x}\) are the partial derivatives of \(\mathrm{f}(\mathbf{x})\) and \(\mathrm{g}(\mathbf{x})\) with respect to \(\mathbf{x}\), respectively (Hoffmann, Bradley, & Rosen, 1989).

Ordinary Kriging

Among different variations of Kriging, Ordinary Kriging is arguably the most common approach(Bazania & Boisvert, 2023). When the global mean is not considered locally reliable, Ordinary Kriging becomes useful for estimating the value of the variable at unsampled locations. This approach employs a search neighborhood at each location. The core concept of Ordinary Kriging is to implicitly estimate the local constant mean of the data values within the search neighborhood to calculate residuals. Simple Kriging, then, utilizes these residuals for estimation (Pyrcz & Deutsch, 2014). This process is achieved in one step with the Ordinary Kriging equations.

The estimated value at the unsampled location using Ordinary Kriging is expressed as:

\[\mathrm{z}_{\mathrm{OK}}^{*} (\mathbf{u})=\sum_{\mathrm{i}=1}^{\mathrm{n}} \lambda_{\mathrm{i}}(\mathbf{u}) \mathrm{z}\left(\mathbf{u}_{\mathrm{i}}\right)+\left[1-\sum_{\mathrm{i}=1}^{\mathrm{n}} \lambda_{\mathrm{i}}(\mathbf{u})\right]\mathrm{m}\]

Where the sum of weights equals one, the resulting estimate is unbiased, and does not use the global mean. The Lagrangian form of the system of equations, after taking partial derivatives, is as follows:

\[\left\{\begin{array}{c}\sum_{\mathrm{j}=1}^\mathrm{n} \lambda_\mathrm{j}(\mathbf{u}) \mathrm{C}\left(\mathbf{u}_{\mathrm{j}}, \mathbf{u}_{\mathrm{i}}\right)+\mu=\mathrm{C}\left(\mathbf{u}_{\mathrm{i}}, \mathbf{u}\right) \text{ for } \mathrm{i}=1, \ldots, \mathrm{n} \\ \sum_{\mathrm{j}=1}^\mathrm{n} \lambda_\mathrm{j}(\mathbf{u})=1\end{array}\right.\]

Where \(\mu\) represents the Lagrange multiplier. Solving this system of equations yields the weights that minimize the error variance (Rossi & Deutsch, 2013). The weights obtained through Ordinary Kriging differ from those of Simple Kriging. As Ordinary Kriging works within search neighborhoods, the mean is considered constant within the neighborhoods rather than across the entire domain. Consequently, it is quasi-stationary rather than strictly stationary (Olea, 2012).

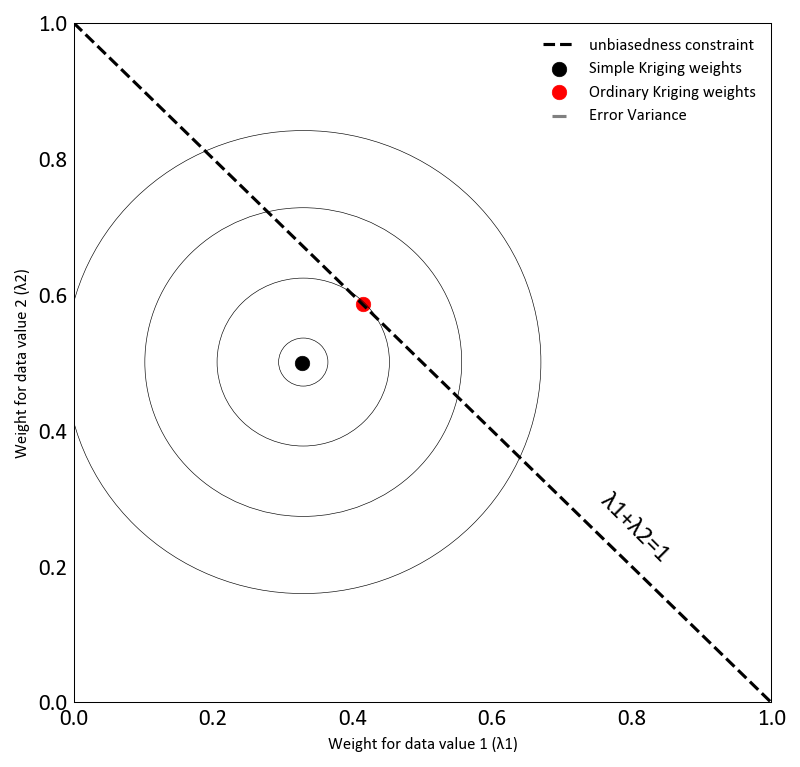

Figure 1 compares the weights obtained through Ordinary and Simple Kriging for two sample points. It is evident from the figure that Simple Kriging yields the minimum possible variance. Conversely, Ordinary Kriging produces a set of weights that minimizes the variance while ensuring that their sum is equal to one. The black dashed line in the figure represents the unbiasedness constraint. The gray circular contours represent increasing error variance contours as they move away from the minimum simple kriging solution.

Ordinary Cokriging

There are situations where secondary data are available, that is, measurements with greater error or of related rock properties. Expanding the kriging equations to include secondary data leads to the technique known as Cokriging. Incorporation of secondary variables entails presence of spatial correlation between primary and secondary variables(Rossi & Deutsch, 2013). The estimate at an unsampled location is expressed as:

\[ \mathrm{z}_{\mathrm{OC}}^*(\mathbf{u})=\sum_{\alpha_1=1}^{\mathrm{n}_1} \lambda_{\alpha_1}(\mathbf{u}) \mathrm{z}\left(\mathbf{u}_{\alpha_1}\right) + \sum_{\alpha_2=1}^{\mathrm{n}_2} \lambda_{\alpha_2}^{\prime}(\mathbf{u}) \mathrm{y} \left(\mathbf{u}_{\alpha_2}^{\prime}\right).\]

Where \(\lambda_{\alpha_1}(\mathbf{u})\) and \(\lambda_{\alpha_2}^{\prime}(\mathbf{u})\) are the weights assigned to the primary data and the secondary data, respectively. \(\mathrm{z}\left(\mathbf{u}_{\alpha_1}\right)\) is the value of primary data, and \(\mathrm{Y} \left(\mathbf{u}_{\alpha_2}^{\prime}\right)\) is the secondary data value at \(\mathbf{u}_{\alpha_2}^{\prime}\). \(\mathrm{n}_1\) represents the number of primary data, and \(\mathrm{n}_2\) stands for the number of secondary data.

Simple Cokriging can be employed under the condition that the mean values for all data types are known. Ordinary Cokriging can be utilized to relax this requirement. Ordinary Cokriging constrains the sum of weights to each data type to ensure an unbiased estimate. There are two variants of Ordinary Cokriging.

The traditional method of Ordinary Cokriging is to separately constrain the sum of weights to primary and secondary variables. The weights assigned to the primary variable are constrained to sum to one, and the sum of weights assigned to each secondary variable should equal zero. These constraints can be rewritten as \(\sum_{\alpha_1} \lambda_{\alpha_1}(\mathbf{u})=1\) and \(\sum_{\alpha_2} \lambda_{\alpha_2}^{\prime}(\mathbf{u})=0.\) A Lagrange multiplier is required for the primary weight constraint and additional Lagrange multipliers are required for each secondary variable. Traditional Ordinary Cokriging is subject to negative weights and small weights to secondary data that diminish the influence of secondary data.

The preferred method over Ordinary Cokriging is Standardized Ordinary Cokriging (SOCK), as it avoids driving the weights of secondary data to zero. In SOCK, the sum of all weights is constrained to one, and it involves the utilization of standardized variables. The standardized value of a variable is: \[\mathrm{Y} =\frac{\mathrm{Z}-\mu}{\sigma}\] Here, \(\mu\) and \(\sigma\) represent mean and variance of the variable, respectively. The estimator for SOCK is written as: \[\sum_{\alpha_1=1}^{\mathrm{n}_1} \lambda_{\alpha_1}(\mathbf{u})+\sum_{\alpha_2=1}^{\mathrm{n}_2} \lambda_{\alpha_2}^{\prime}(\mathbf{u})=1.\] This approach requires one constraint and one Lagrange multiplier.

Penalized Kriging

In some cases, large positive weights in Ordinary Kriging are counterbalanced by negative weights maintaining the sum of weights equal to one. Although these weights are theoretically correct, they may present practical challenges in presence of outliers. Penalized Kriging tackles this issue by introducing an additional term to regulate the magnitude of the weights (Rivoirard & Romary, 2011). The objective function of this approach is modified to:

\[\mathrm{Q}( {\lambda}( \mathbf{u}_\mathrm{i}), \mu)=\mathrm{\sigma_E^2}+2 \mathrm{\mu}\left[\sum_{ \mathrm{j} =1}^{\mathrm{n}} \mathrm{\lambda_\mathrm{j}}(\mathrm{\mathbf{u}})-1\right]+\sum_{\mathrm{j}=1}^{\mathrm{n}} \mathrm{V} \mathrm{\lambda^2_j}(\mathrm{\mathbf{u}}) \quad \text{for}\quad \mathrm{i}=1, \ldots , \mathrm{n}.\]

The constant value \(\mathrm{V}\) serves as a regulating term, and its value plays a determinative role in achieving the intended results. The derivative form of the objective function:

\[\left\{\begin{array}{l}\lambda_\mathrm{j}(\mathbf{u}) \mathrm{V}+\sum_{\mathrm{i}=1}^\mathrm{n} \lambda_\mathrm{i}(\mathbf{u}) \mathrm{C}\left(\mathbf{u}_{\mathbf{i}}, \mathbf{u}_{\mathbf{j}}\right)+\mu=\mathrm{C}\left(\mathbf{u}_{\mathbf{j}}, \mathbf{u}\right) \quad \text{for}\quad \mathrm{j}=1, \ldots , \mathrm{n} \\ \sum_{\mathrm{j}=1}^\mathrm{n} \lambda_\mathrm{j}(\mathbf{u})=1\end{array}\right.\]

The estimation and estimation variance equation resemble Ordinary Kriging. The value of \(\mathrm{V}\) would be calibrated or chosen by a sensitivity analysis.

Universal Kriging

In some cases, a clear trend in the mean limits the application of Simple and Ordinary Kriging on account of their reliance on the assumption of stationarity. Universal Kriging, also known as Kriging with trend, is designed to consider a functional form of the trend. The assumption is that each data value consists of a residual random function and a deterministic component that can be determined using a polynomial of the coordinates (Wackernagel, 2003). The deterministic part can be rewritten as:

\[\mathrm{m}(\mathbf{u})=\sum_{\mathrm{l}=0}^{\mathrm{L}}\mathrm{a}_{\mathrm{l}}\mathrm{f}_{\mathrm{l}}(\mathbf{u}).\]

Here, \(\mathrm{m}(\mathbf{u})\) is the location dependent mean, and \(\mathrm{a}_\mathrm{l}\) are unknown coefficients that are fitted from the data. The \(\mathrm{f}_{\mathrm{l}}(\mathbf{u})\) terms are elementary functions [Wackernagel, 2003]. Conventionally, \(\mathrm{f}_{0}(\mathbf{u})\) is set to one, in order to include situations where the mean is constant, similar to Ordinary Kriging (Rossi & Deutsch, 2013). Setting the expected value of the estimate equal to the truth can be used to define the system of equations:

\[\mathrm{E}[\mathrm{Z}(\mathbf{u})]=\mathrm{E}[\mathrm{Z}^*(\mathbf{u})].\]

The left-hand side of the above equation is the local mean at the location being estimated:

\[\mathrm{E}[\mathrm{Z}(\mathbf{u})]= \sum_{\mathrm{l}=0}^{\mathrm{L}}\mathrm{a}_{\mathrm{l}}\mathrm{f}_{\mathrm{l}}(\mathbf{u}).\]

In the right-hand side of the unbiasedness equation is the local mean of the estimate:

\[\mathrm{E}[\mathrm{Z}^*(\mathbf{u})]=\sum_{\mathrm{i}=1}^{\mathrm{n}}\lambda_{\mathrm{i}} \sum_{\mathrm{l}=0}^{\mathrm{L}}\mathrm{a}_{\mathrm{l}}\mathrm{f}_{\mathrm{l}}(\mathbf{u}).\]

The ensure unbiasedness, this equality must be satisfied:

\[\sum_{\mathrm{l}=0}^{\mathrm{L}} \mathrm{a}_{\mathrm{l}} \mathrm{f}_{\mathrm{l}}(\mathbf{u})=\sum_{\mathrm{l}=0}^{\mathrm{L}} \mathrm{a}_{\mathrm{l}} \sum_{\mathrm{i}=1}^{\mathrm{n}} \lambda_{\mathrm{i}} \mathrm{f}_{\mathrm{l}}\left(\mathbf{u}_{\mathrm{i}}\right).\]

If \(\mathrm{f}_{\mathrm{l}}(\mathbf{u}) = \sum_{\mathrm{i}=1}^{\mathrm{n}} \lambda_{\mathrm{i}} \mathrm{f}_{\mathrm{l}}\left(\mathbf{u}_{\mathrm{i}}\right)\) for all \(\mathrm{l}=0, \ldots, \mathrm{L}\), the above equation is satisfied. There may be alternative ways that this equality is met, but this is a reasonable alternative. Thus, the system of equation for Universal Kriging:

\[\left\{\begin{array}{c}\sum_{\mathrm{j}=1}^\mathrm{n} \lambda_\mathrm{i}(\mathbf{u}) \mathrm{C}\left(\mathbf{u}_\mathrm{i}, \mathbf{u}_{\mathbf{j}}\right)+\sum_{\mathrm{l}=0}^{\mathrm{L}} \mu_{\mathrm{l}} \mathrm{f}_{\mathrm{l}}\left(\mathbf{u}_{\mathrm{i}}\right)=\mathrm{C}\left(\mathbf{u}_{\mathbf{i}}, \mathbf{u}\right) \quad \text { for } \quad \mathrm{i}=1, \ldots, \mathrm{n} \\ \sum_{\mathrm{j}=1}^{\mathrm{n}} \lambda_{\mathrm{j}} \mathrm{f}_{\mathrm{l}}\left(\mathbf{u}_{\mathrm{j}}\right)=\mathrm{f}_{\mathrm{l}}(\mathbf{u}) \quad \text { for } \quad \mathrm{l}=0, \ldots, \mathrm{L}\end{array}\right.\]

The \(\mu_l\) values in the above equation are the Lagrange multipliers (Wackernagel, 2003). Universal Kriging may be unstable in extrapolation due to the potential instability of the fitted model for the local mean (Pyrcz & Deutsch, 2014).

Kriging with an External Drift

Kriging with external drift is a slight modification of Universal Kriging. Instead of using a polynomial to define the local mean, the value of another attribute can be used. The local mean is defined as:

\[\mathrm{m}(\mathbf{u})=\mathrm{a}_0+\mathrm{a}_1 \mathrm{Y}(\mathbf{u}).\]

Where \(\mathrm{Y}(\mathbf{u})\) represents the secondary variable, and \(\mathrm{a}_0\) and \(\mathrm{a}_1\) are parameters for fitting the local mean. The secondary variable should be linearly related to the primary variable. Additionally, (1) it should demonstrate smooth variation between points since it represents the mean, and (2) the values of the secondary variable must be available at all locations. The application of Kriging with External Drift is limited in the mining industry due to the absence of a secondary variable with a linear relationship to mineral grades. However, it has been utilized in other areas such as predicting air quality, estimating temperatures, and seismic data integration (Rossi & Deutsch, 2013).

Compositional Kriging

When estimating compositional data, all values should be non-negative and sum to a specific constant, typically 1 or \(100\%\). For instance, when estimating the thickness of various soil layers in a domain, the results cannot be negative and the sum of all layer thicknesses must equal the total soil thickness. These two features should be considered, along with accounting for spatial correlation during estimation. The desired estimation can be achieved by incorporating additional constraints into the Kriging equations. These constraints can be written as:

\[\sum_{\mathrm{i}=1}^\mathrm{n} \lambda_\mathrm{i}^\mathrm{k}(\mathbf{u}) \mathrm{z}^\mathrm{k}\left(\mathbf{u}_\mathrm{i}\right) \geq 0 \quad \text { for } \quad \mathrm{k}=1, \ldots, \mathrm{K} \\\]

\[1-\sum_{\mathrm{k}=1}^\mathrm{K} \sum_{\mathrm{i}=1}^\mathrm{n} \lambda_\mathrm{i}^\mathrm{k} (\mathbf{u}) \mathrm{z}^\mathrm{k}\left(\mathbf{u} _\mathrm{i}\right)=0 \\ . \]

Here, \(\lambda_\mathrm{i}^\mathrm{k}\) is the weight corresponding to variable \(\mathrm{k}\) of the data at \(\mathbf{u}_\mathrm{i}\). \(\mathrm{z}^\mathrm{k}\left(\mathbf{u}_\mathrm{i}\right)\) is the value of variable \(\mathrm{k}\) of the data at \(\mathbf{u}_\mathrm{i}\). The \(\mathrm{K}\) and \(\mathrm{n}\) represent the number of variables and the number of data points respectively. For the sake of simplicity, the desired sum is considered one.

As the problem at hand involves optimization with both equality and inequality constraints, it necessitates a solution through the Kuhn-Tucker approach(Pawlowsky-Glahn, Egozcue, & Tolosana-Delgado, 2015). The Kuhn-Tucker representation of Compositional Kriging is as follows:

\[\sum_{\mathrm{i}=1}^\mathrm{n} \mathrm{C}^\mathrm{k} (\mathbf{u}_\mathrm{i},\mathbf{u}_\mathrm{j}) \lambda_\mathrm{i}^\mathrm{k}(\mathbf{u})+\mu^\mathrm{k}+\beta^\mathrm{k}\mathrm{z}^\mathrm{k}\left(\mathbf{u}_\mathrm{j}\right)+\alpha^\mathrm{k} \mathrm{z}^\mathrm{k}\left(\mathbf{u}_\mathrm{j}\right)= \mathrm{C}^\mathrm{k} (\mathbf{u}_\mathrm{j},\mathbf{u}) \text { for } \mathrm{j}=1, \ldots, \mathrm{n} ; \mathrm{k}=1, \ldots, \mathrm{K} \\\]

\[ \sum_{\mathrm{i}=1}^\mathrm{n} \lambda_\mathrm{i}^\mathrm{k}(\mathbf{u})=1 \quad \text{for}\quad \mathrm{k}=1, \ldots, \mathrm{K} \\ \]

\[\sum_{\mathrm{k}=1}^\mathrm{K} \sum_{\mathrm{i}=1}^\mathrm{n} \lambda_\mathrm{i}^\mathrm{k} (\mathbf{u}) \mathrm{z}^\mathrm{k}\left(\mathbf{u} _\mathrm{i}\right)=1 \\\]

\[\sum_{\mathrm{i}=1}^\mathrm{n} \lambda_\mathrm{i}^\mathrm{k}(\mathbf{u}) \mathrm{z}^\mathrm{k}\left(\mathbf{u}_\mathrm{i}\right) \geq 0 \quad \text{for}\quad \mathrm{k}=1, \ldots, \mathrm{K} \\\]

\[\alpha^\mathrm{k} \leq 0 \quad \text{for}\quad \mathrm{k}=1, \ldots, \mathrm{K} \\\]

\[\alpha^\mathrm{k}\left(\lambda_\mathrm{i}^\mathrm{k} (\mathbf{u}) \mathrm{z}^\mathrm{k}\left(\mathbf{u}_\mathrm{i}\right)\right)=0 \quad \text{for}\quad \mathrm{k} =1, \ldots, \mathrm{K} \\\]

The first constraint is introduced into the system of equations to prevent bias, with \(\mu\) as the associated Lagrange multiplier. Similarly, Lagrange multipliers \(\alpha\) and \(\beta\) are invoked to enforce non-negativity and summation constraints, respectively.

The concept of active constraints can be utilized for solving this optimization problem (Wismer & Chattergy, 1978). Theil and Van de Panne is an iterative approach for finding active constraints. The first step for this approach is solving the problem without considering inequality constraints. Then, new equality constraints are introduced for violated constraints. This iterative process continues until there is no violated constraint (Walvoort & Gruijter, 2001).

Summary

This Lesson reinforced that Kriging is a widely used data-driven estimator in geostatistics. It is based on continuous random variables, measures of spatial dependency inferred from the data, and data geometry for determining the weighting of each data relative to each unsampled location. The Simple Kriging method requires a constant and known mean throughout the domain. In practical circumstances, various constraints for Kriging are introduced. Ordinary Kriging is widely used when the global mean is unknown. Penalized Kriging adds a constraint to control the weights. Universal Kriging deals with non-stationary data using polynomials for defining the local mean. Kriging with External Drift incorporates a secondary variable to model the spatial trend. Compositional Kriging addresses interpolation with compositional data, ensuring non-negativity and maintaining summation constraints.

The interactive figure below illustrates the estimated values using various kriging approaches. A notable distinction emerges in the extrapolation segment of them. Specifically, Penalized Kriging, while exhibiting a departure from exactitude at data samples, demonstrates efficacy in extrapolation.

An interactive illustration of the impact of kriging constraints.

There are more Kriging approaches that are not discussed in this Lesson. Indicator Kriging is one of them which works with categorical data. The formalism of constrained Kriging is flexible and useful.